Introduction

Notes from my study of toon (cel) rendering.

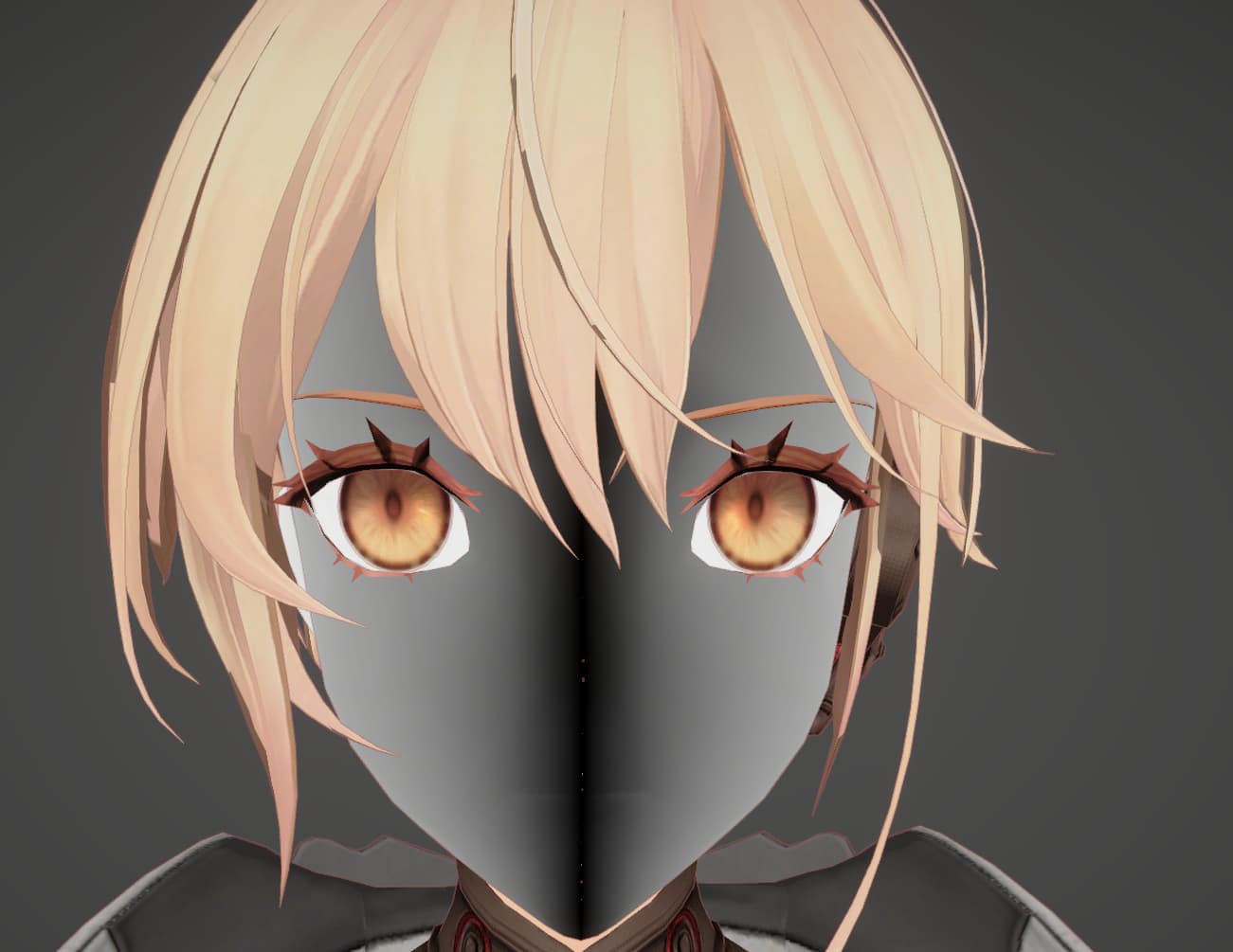

Eyes

Parallax mapping gives the eyes a sense of depth.
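
As a rough illustration of the idea, here is a minimal sketch of a basic parallax offset. The function name `EyeParallaxUV` and its parameters are hypothetical, and it assumes a tangent-space view direction pointing from the surface toward the camera plus a per-pixel depth value for how far the iris sits below the cornea.

```hlsl
// Hypothetical helper: shifts the iris UV so it appears sunk below the surface.
// viewDirTS : tangent-space direction from the surface to the camera
// depth     : how deep the iris sits (e.g. read from a mask), in [0, 1]
// scale     : artist-tuned strength
float2 EyeParallaxUV(float2 uv, float3 viewDirTS, float depth, float scale)
{
    float3 v = normalize(viewDirTS);
    // The shift grows as the view tilts away from the surface normal (+z).
    // Clamp z to avoid huge offsets at grazing angles.
    float2 offset = v.xy / max(v.z, 0.1) * (depth * scale);
    return uv - offset;
}
```

The clamp on `v.z` is a design choice to keep the effect stable at grazing angles; a steep-parallax or ray-marched variant would be needed for larger depths.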


Environment reflection

Specular highlights

Played back in the shader as a Flipbook animation.
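
A minimal sketch of how flipbook UVs can be computed, assuming the frames are laid out left to right, top to bottom in a single atlas. `FlipbookUV` and its parameters are hypothetical names, not part of any Unity library.

```hlsl
// Hypothetical helper: maps a [0,1] UV into one cell of a columns x rows atlas.
// frame is typically time * framesPerSecond.
float2 FlipbookUV(float2 uv, float columns, float rows, float frame)
{
    float total = columns * rows;
    float index = floor(fmod(frame, total));   // current frame, wraps around
    float col   = fmod(index, columns);
    float row   = floor(index / columns);
    float2 cell = float2(1.0 / columns, 1.0 / rows);
    // Flip the row so frame 0 is the top-left cell (UV origin is bottom-left).
    return (uv + float2(col, rows - 1.0 - row)) * cell;
}
```

The returned UV can then be used to sample the highlight atlas in place of the original UV.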

Matcap

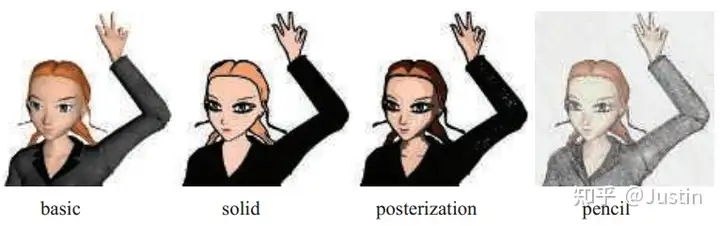

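Matcap is a material rendering technique: it renders a material by sampling a precomputed material image (the Matcap texture).

A Matcap texture is a 2D texture that stores material information, usually generated with a spherical mapping. The shader samples the Matcap texture based on the fragment normal and uses the resulting color to shade the surface.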

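The basic steps of Matcap sampling are:

- Transform the fragment normal (from a normal map or the vertex normal) into view space, where the z axis lies along the viewing direction

- Normalize the view-space normal (divide it by its length) so that each component lies in [-1, 1]

- Orient the axes so that z is aligned with the viewing direction, x points right, and y points up. This completes the conversion from view space to Matcap space

- Sample the color: remap the normal's xy from [-1, 1] to [0, 1] and use it as the UV into the Matcap texture

- The resulting RGB value is the fragment's Matcap color. It can be output directly or blended with other colors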
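
The steps above can be sketched as a small helper. `MatCapUV` is a hypothetical name, and the sketch assumes the normal has already been transformed into view space.

```hlsl
// Hypothetical helper: converts a view-space normal into Matcap texture UVs.
float2 MatCapUV(float3 normalVS)
{
    float3 n = normalize(normalVS);   // each component now lies in [-1, 1]
    return n.xy * 0.5 + 0.5;          // remap x and y to [0, 1]
}
```

A normal facing the camera lands at the center of the texture (0.5, 0.5), and normals at the silhouette land on the edge of the Matcap sphere.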

Matcap sampling

`inline half3 SamplerMatCap(half4 matCapColor, half2 uv, half3 normalWS, half2 screenUV, TEXTURE2D_PARAM(matCapTex, sampler_matCapTex))`

`half3 normalVS = mul((float3x3)UNITY_MATRIX_V, normalWS);`

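This transforms the normal from world space to view space. `TransformWorldToViewDir` can be used instead. (`TransformWorldToView` is meant for positions, because it also applies the view matrix's translation.)

`half3 normalVS = TransformWorldToViewDir(normalWS);`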

Apply the camera offset:

`float3 NormalBlend_MatcapUV_Detail = viewNormal.rgb * float3(-1,-1,1);`

Use a weight to control the blend between the raw Matcap color and the Matcap color multiplied by albedo:

`return lerp(surfaceData.matcapCol,surfaceData.matcapCol*surfaceData.albedo,_MatCapAlbedoWeight);`

Caustics

Either bake them into the texture, or build a mask based on distance.

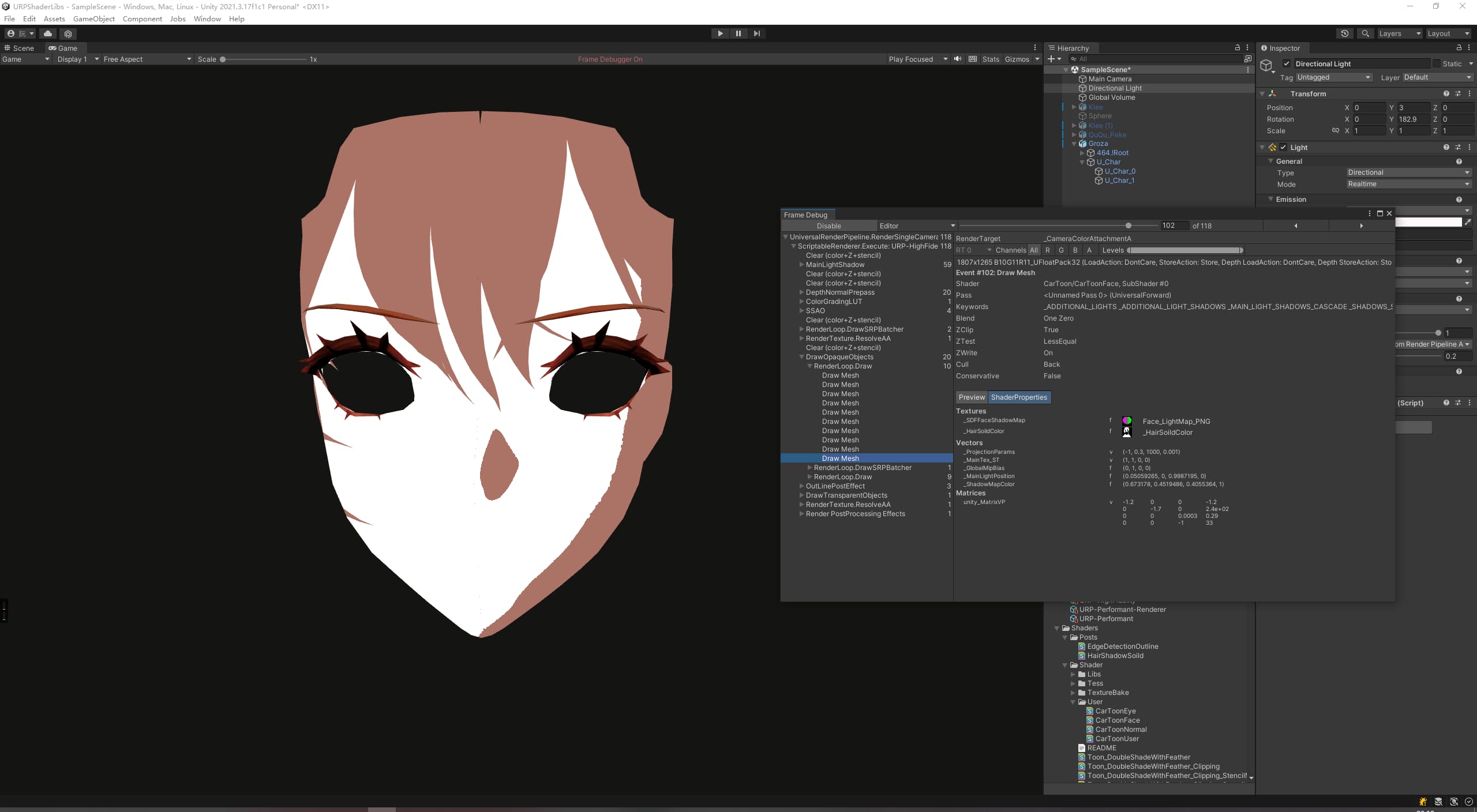

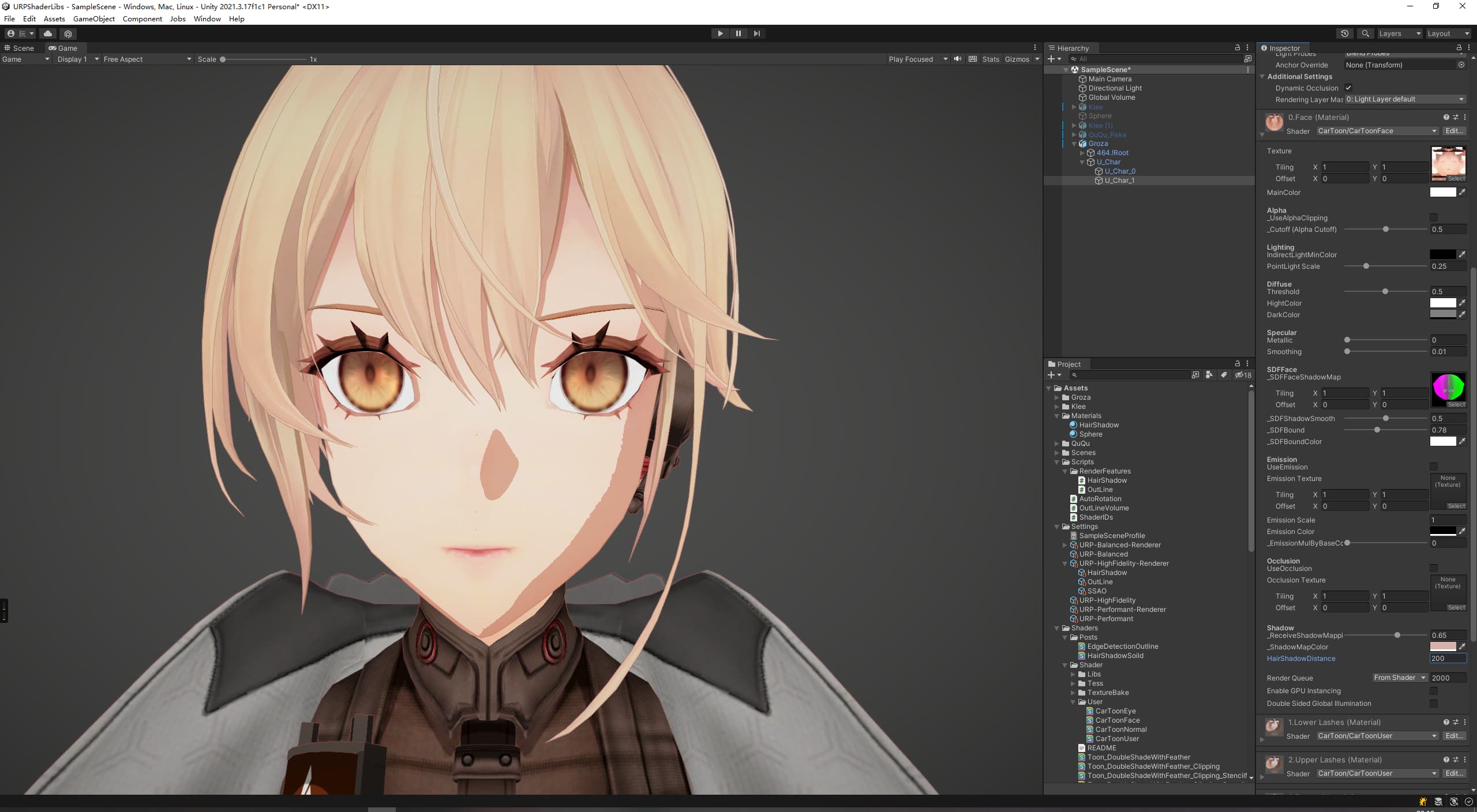

Face

SDF

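A pitfall I ran into:

This calculation requires the face UVs to span 0 to 1, but on the model I used the face UVs span 0.5 to 1.

Result after remapping the UVs with uv * 2 - 1

Result after the fix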
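
The remap can be written in a slightly more general form. `RemapUV` is a hypothetical helper; with `uvMin = 0.5` and `uvMax = 1.0` it reduces to `uv * 2 - 1`.

```hlsl
// Hypothetical helper: stretches the sub-range [uvMin, uvMax] to [0, 1].
float2 RemapUV(float2 uv, float2 uvMin, float2 uvMax)
{
    return (uv - uvMin) / (uvMax - uvMin);
}

// For face UVs that occupy 0.5..1 on both axes:
// (uv - 0.5) / 0.5 == uv * 2 - 1
float2 RemapFaceUV(float2 uv)
{
    return RemapUV(uv, float2(0.5, 0.5), float2(1.0, 1.0));
}
```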

SDF calculation
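
The original listing for this step is not available here, so the following is only a sketch of one common way to evaluate a face SDF shadow, not necessarily the method used in these notes. All names are hypothetical. It assumes the head is upright (only the horizontal xz components are used), the light is not exactly vertical, and brighter SDF texels become lit first.

```hlsl
// Hypothetical helper: returns 1 where the face is lit and 0 where it is shadowed.
// sdfValue        : SDF texture sampled at uv
// sdfValueFlipped : SDF texture sampled at float2(1 - uv.x, uv.y)
float FaceSdfShadow(float sdfValue, float sdfValueFlipped,
                    float3 lightDirWS, float3 headForwardWS, float3 headRightWS)
{
    // Project everything onto the horizontal plane.
    float2 l = normalize(lightDirWS.xz);
    float2 f = normalize(headForwardWS.xz);
    float2 r = normalize(headRightWS.xz);

    float FdotL = dot(f, l);   // 1 = light in front of the face, -1 = behind
    float RdotL = dot(r, l);   // sign tells which side the light is on

    // Which sample goes with which side depends on how the texture was authored.
    float sdf = RdotL > 0.0 ? sdfValueFlipped : sdfValue;

    // Threshold is 0 with the light in front and 1 with the light behind.
    float threshold = 1.0 - (FdotL * 0.5 + 0.5);
    return step(threshold, sdf);
}
```

Replacing `step` with a narrow `smoothstep` around the threshold would soften the shadow edge.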


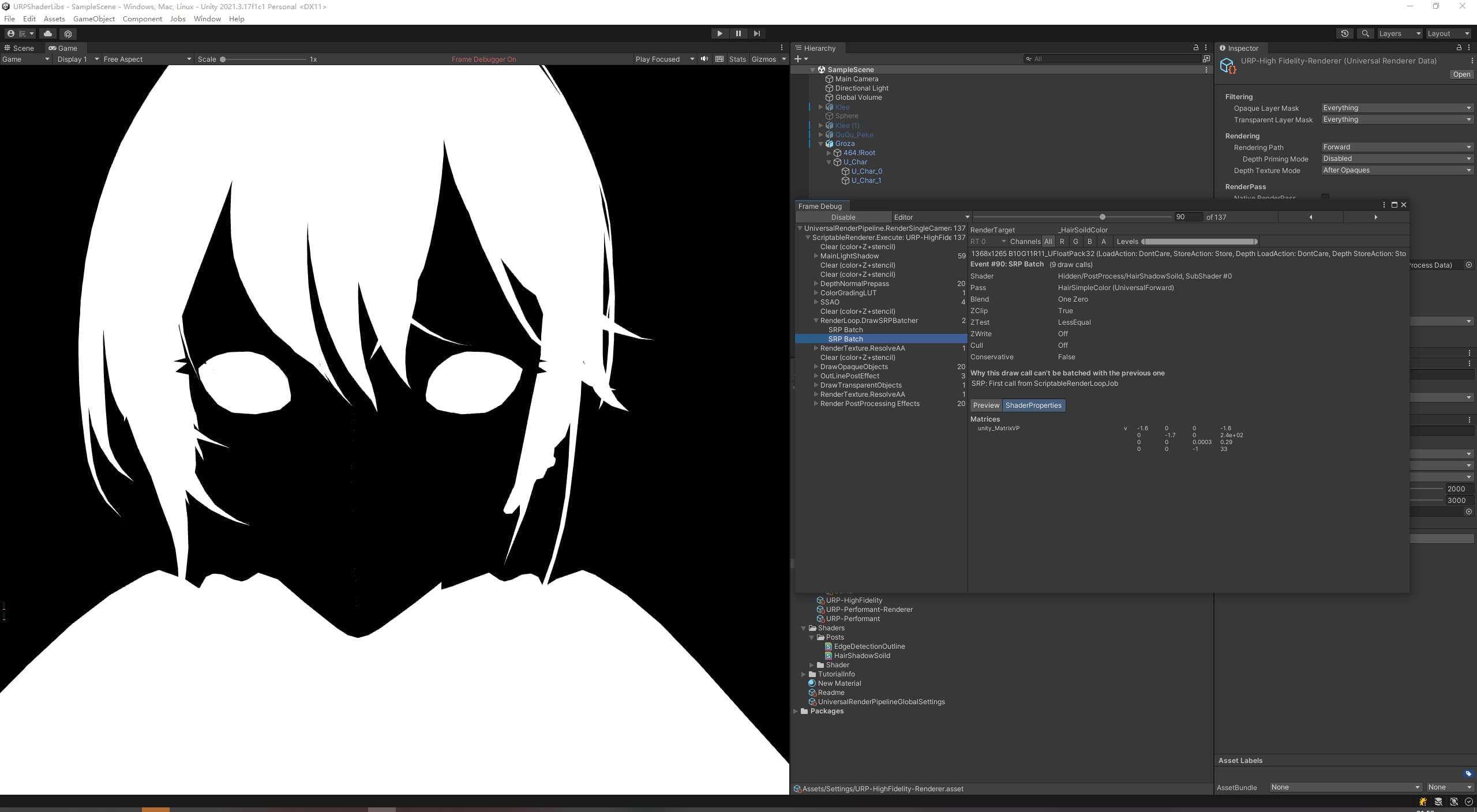

Hair shadow

Reference: 【Unity URP】以Render Feature实现卡通渲染中的刘海投影 (casting bangs shadows in toon rendering with a Render Feature), an article by 流朔 on Zhihu

How it works

- Use a RenderFeature to render the hair into a solid-color buffer

- When rendering the face, sample this solid-color buffer
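
The face-side sampling can be sketched as follows. The names are hypothetical, and the offset direction is left as a parameter because the right choice (for example the view direction, or the light direction in view space) is an assumption to settle per project. Dividing by view depth is also an assumption, made so the shadow width stays roughly constant on the model as the camera moves.

```hlsl
// Hypothetical helper: screen UV at which the face samples the hair buffer.
// offsetDir   : 2D direction (in view/screen space) to shift the lookup
// offsetScale : artist-tuned shadow length
// viewDepth   : positive distance of the fragment from the camera
float2 HairShadowSampleUV(float2 screenUV, float2 offsetDir,
                          float offsetScale, float viewDepth)
{
    float2 offset = offsetDir * offsetScale / max(viewDepth, 0.001);
    return screenUV + offset;
}

// hairMask is the solid-color buffer sampled at the offset UV (1 = hair present).
float HairShadowAttenuation(float hairMask)
{
    return 1.0 - hairMask;   // 0 = fully shadowed by hair, 1 = unshadowed
}
```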

ScriptableRendererFeature


Pass


Shader

`half3 ShadeSingleFaceLight(ToonSurfaceData surfaceData,ToonLightingData lightData,Light light,Varyings input){`

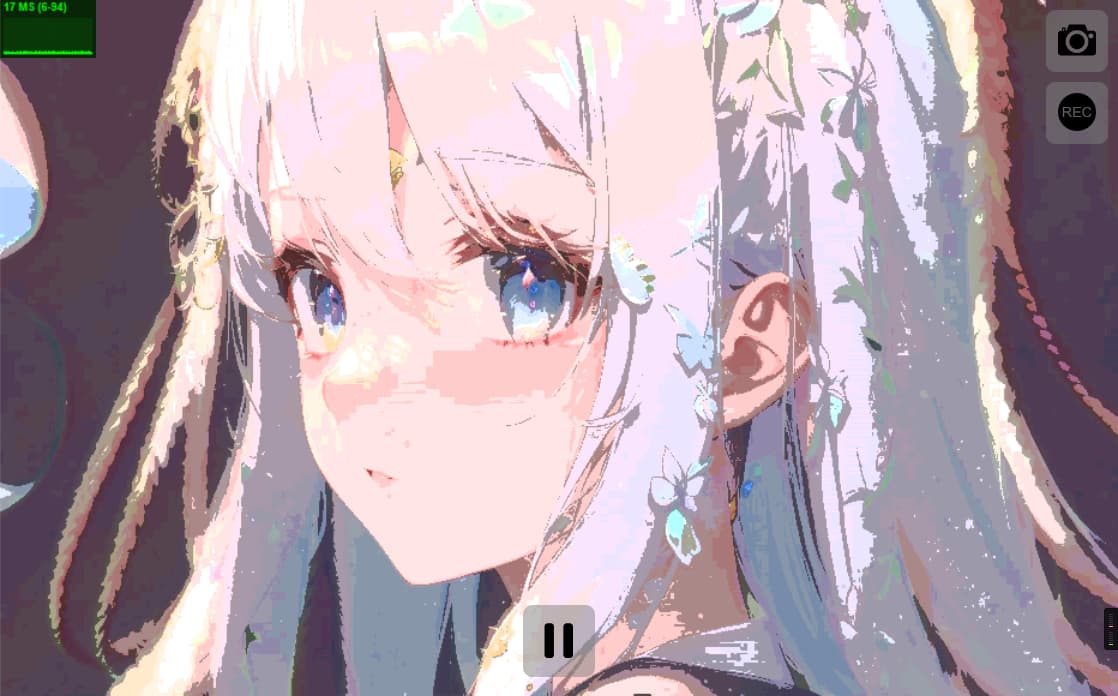

Intermediate results

Solid-color mask result

Sampling the face shadow map with an offset based on the view direction

Final result

Depth-based rim light

Outline

Post-process outline

Tonemap

Posterization

This could be used to make a paint-by-numbers game.
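
A minimal sketch of posterization, quantizing each color channel into a fixed number of bands. `Posterize` here is a hypothetical helper and `steps` is an assumed artist-facing parameter.

```hlsl
// Hypothetical helper: snaps each channel down to the nearest multiple of 1/steps.
float3 Posterize(float3 color, float steps)
{
    return floor(saturate(color) * steps) / steps;
}
```

With `steps = 4`, each channel takes the values 0, 0.25, 0.5, and 0.75 (plus 1.0 for inputs that are exactly 1), which produces the flat color regions a paint-by-numbers look depends on.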

ShaderToy